Quality of Service

QoS is the art of controlling how traffic flows through a device. This is done by prioritising packets, applying bandwidth limits and defining the order in which a device transmits its packets. However, it is often seen as a somewhat dark art and is frequently disregarded or left at the defaults. Given that I'm interested in how network devices schedule their packets internally, understanding how QoS works is the most important step towards a good grasp of how a network device works internally.

Buffering

Any network device buffers packets before it transmits them to the egress port. For this, there are three possible strategies. 1

Queuing implementations

There are three ways to buffer packets: naive (input) queuing, two-stage queuing and virtual output queuing. Each has its advantages and disadvantages, and a device can use different methods in different places, for example CIOQ for the ports and VOQ for the fabric.

Naive queuing

With input queuing (IQ), each ingress port has a buffer in which all received packets are stored. Packets can only leave the buffer in First In First Out order. This is the easiest approach to implement. The downside is that if the packet at the head of the buffer is destined for an egress port that is full, everything behind it is stuck waiting as well, a problem known as head-of-line blocking.
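To make the blocking behaviour concrete, here is a minimal sketch of a single ingress FIFO. The function name `drain_fifo` and the port labels are made up for illustration; real hardware does not work on Python deques.

```python
from collections import deque

def drain_fifo(ingress, egress_ready):
    """Forward packets from one ingress FIFO for a single scheduling round.

    ingress: deque of destination egress ports, head of the queue first.
    egress_ready: set of egress ports that can accept a packet right now.
    Returns the packets forwarded before the head of the queue blocked.
    """
    sent = []
    # Only the head may leave; a blocked head stalls everything behind it.
    while ingress and ingress[0] in egress_ready:
        sent.append(ingress.popleft())
    return sent

# The head packet wants port "B", which is full,
# so the packets for "A" and "C" are stuck as well.
queue = deque(["B", "A", "C"])
forwarded = drain_fifo(queue, egress_ready={"A", "C"})
print(forwarded)    # []
print(list(queue))  # ['B', 'A', 'C']
```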

Two-stage queuing

Combined Input-Output Queuing (CIOQ) buffers packets on both the ingress and the egress side. When a packet arrives at the ingress port, it is stored in the ingress buffers, processed, and then sent to the queue of the output port, where the output port processes it again and forwards it. This setup offers the most flexibility but is also quite expensive to implement: every packet is stored and retrieved twice, once on ingress and once on egress, so each forwarding block needs twice the buffers and twice the memory bandwidth.

VOQ

In Virtual Output Queuing (VOQ), packets are only buffered on the ingress side. When a packet arrives on ingress, it is processed and stored in the ingress buffers, tagged with the output queue it belongs to. When the output port has room to transmit a packet, it retrieves the packet from the ingress buffer and sends it directly onto the wire. This design is simpler and cheaper to implement than CIOQ, but it is less flexible because fewer queues are available for complex classification.
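As a rough sketch of the idea, the ingress side can be modelled as one small queue per egress port, from which the egress port pulls when it has room. The class `IngressVoq` and its methods are hypothetical names, not any vendor's API.

```python
from collections import defaultdict, deque

class IngressVoq:
    """One ingress port holding a separate virtual queue per egress port."""

    def __init__(self):
        self.queues = defaultdict(deque)

    def enqueue(self, egress_port, packet):
        # The queue a packet sits in acts as its output-queue tag.
        self.queues[egress_port].append(packet)

    def pull(self, egress_port):
        # Called by the egress port when it has room to transmit.
        q = self.queues[egress_port]
        return q.popleft() if q else None

voq = IngressVoq()
for port, pkt in [("B", "p1"), ("A", "p2"), ("C", "p3")]:
    voq.enqueue(port, pkt)

# Port B is congested and never pulls; A and C are still served.
print(voq.pull("A"), voq.pull("C"))  # p2 p3
```

Compared with a single FIFO per ingress port, a congested egress port only holds up its own virtual queue.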

Examples

For example, Juniper MX routers use CIOQ with VOQ for access to the fabric, while a Broadcom Jericho or Juniper PTX device uses end-to-end VOQ only.

Queuing

Most network devices nowadays offer eight output queues. On ingress, traffic is classified and mapped to one of these eight queues. In general, queue 7 has the highest priority and queue 0 the lowest. For each port, the device loops through the queues from 7 down to 0 looking for packets.

Most devices partition the queues into two types: strict-high and low. The scheduler first empties all queues marked as strict-high before it looks at the lower queues. As soon as a packet arrives at a strict-high queue, it is transmitted ahead of any packets waiting in the low queues.
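The selection order can be sketched as follows. This is a simplification under stated assumptions: `next_packet` is a made-up name, and the low queues are served purely in priority order here, whereas real schedulers usually also share bandwidth between them.

```python
from collections import deque

def next_packet(queues, strict_high):
    """Pick the next packet to transmit on one port.

    queues: dict mapping queue number (0-7) to a deque of packets.
    strict_high: set of queue numbers marked strict-high.
    Returns (queue_number, packet), or None if every queue is empty.
    """
    # Strict-high queues are always checked first, highest number first.
    for q in sorted(strict_high, reverse=True):
        if queues[q]:
            return q, queues[q].popleft()
    # Only when they are empty do the remaining queues get a turn.
    for q in sorted(set(queues) - set(strict_high), reverse=True):
        if queues[q]:
            return q, queues[q].popleft()
    return None

queues = {n: deque() for n in range(8)}
queues[5].extend(["data1", "data2"])
queues[7].append("voice1")

order = []
while (picked := next_packet(queues, strict_high={7})) is not None:
    order.append(picked)
print(order)  # [(7, 'voice1'), (5, 'data1'), (5, 'data2')]
```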

Each queue can be given a bandwidth limit. When the port is fully saturated, the scheduler serves each queue up to its limit; if there is excess bandwidth available, it still transmits packets beyond the limit. For example, say queue 7 is strict-high with a bandwidth limit of 10 Mbit/s. The scheduler transmits packets from queue 7 until it reaches 10 Mbit/s and then switches to the other queues. Depending on the vendor, if there is still room available it then transmits the remaining packets from the strict-high queue, or those packets are dropped. To prevent starvation of the lower queues, it is good practice to put a bandwidth limit on strict-high queues.

Each queue can also be given a bandwidth percentage. For example, queue 5 gets 80% and queues 6 and 7 get 10% each. Under congestion, the scheduler then allocates 80% of the bandwidth to queue 5 and 10% each to queues 6 and 7. Yet if there is only traffic in queue 7, it can still use all available bandwidth, minus whatever the strict-priority queues consume.
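The bandwidth percentage example can be worked through with a small calculation. The sketch below is an assumption about how a work-conserving scheduler behaves in aggregate, not a description of any vendor's algorithm; `allocate` is a hypothetical helper, it ignores strict-high queues, and it assumes all weights are positive.

```python
def allocate(link_mbit, weights, demand):
    """Split link bandwidth by weight, passing unused share to busy queues.

    weights: dict queue -> configured percentage (assumed positive).
    demand: dict queue -> offered load in Mbit/s.
    Returns dict queue -> allocated Mbit/s.
    """
    alloc = {q: 0.0 for q in weights}
    active = {q for q in weights if demand.get(q, 0) > 0}
    remaining = float(link_mbit)
    while active and remaining > 1e-9:
        total_w = sum(weights[q] for q in active)
        satisfied, used = set(), 0.0
        for q in active:
            share = remaining * weights[q] / total_w
            give = min(share, demand[q] - alloc[q])
            alloc[q] += give
            used += give
            if alloc[q] >= demand[q] - 1e-9:
                satisfied.add(q)
        remaining -= used
        if not satisfied:
            break  # every active queue used its full share
        active -= satisfied
    return alloc

weights = {5: 80, 6: 10, 7: 10}
# All three queues congested: 80/10/10 split of a 100 Mbit/s link.
print(allocate(100, weights, {5: 1000, 6: 1000, 7: 1000}))  # {5: 80.0, 6: 10.0, 7: 10.0}
# Only queue 7 has traffic: it may use the whole link.
print(allocate(100, weights, {7: 1000}))                    # {5: 0.0, 6: 0.0, 7: 100.0}
```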

Classification

Now that we know how traffic gets forwarded from each queue, the next question is how we classify packets so that they end up in a specific queue. Both 802.1Q headers and IP headers can carry the priority of the traffic: for 802.1Q this is done with 802.1p CoS values, and for IP via the ToS field, nowadays split into DSCP and ECN.

Class of Service

Inside the VLAN tag, CoS gives us 3 bits to mark a class from 0 to 7 and one bit to mark whether a packet is eligible for dropping. Normally, packets marked with a CoS of 0 are mapped to queue 1 and packets with a CoS of 1 are mapped to queue 0; all other values are mapped one to one.

| CoS | Queue |
|---|---|
| 0 | 1 |
| 1 | 0 |
| 2 | 2 |
| 3 | 3 |
| 4 | 4 |
| 5 | 5 |
| 6 | 6 |
| 7 | 7 |
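As an illustration, the sketch below pulls the CoS bits and the drop-eligible bit out of the 16-bit Tag Control Information field of an 802.1Q tag and applies the mapping from the table. The function name and the returned dictionary layout are my own choices for the example.

```python
# CoS-to-queue mapping from the table above.
COS_TO_QUEUE = {0: 1, 1: 0, 2: 2, 3: 3, 4: 4, 5: 5, 6: 6, 7: 7}

def classify_vlan_tag(tci):
    """Split a 16-bit 802.1Q Tag Control Information value."""
    cos = (tci >> 13) & 0x7   # top 3 bits: priority (CoS)
    dei = (tci >> 12) & 0x1   # next bit: drop eligible indicator
    vid = tci & 0x0FFF        # low 12 bits: VLAN ID
    return {
        "cos": cos,
        "drop_eligible": bool(dei),
        "vlan": vid,
        "queue": COS_TO_QUEUE[cos],
    }

# 0xB064 = binary 101 1 000001100100: CoS 5, drop eligible, VLAN 100.
print(classify_vlan_tag(0xB064))
# {'cos': 5, 'drop_eligible': True, 'vlan': 100, 'queue': 5}
```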

DSCP

DSCP uses 6 bits of the DS field in IP packets; the other 2 bits are used for ECN, which signals congestion. The DSCP values are divided into four groups: Best Effort, Expedited Forwarding, Assured Forwarding and Class Selectors, the last of which are backwards compatible with ToS. RFC 4594 goes into detail about the recommended DSCP configurations. Again we map DSCP values to different queues, and because we have more bits, the value also encodes the drop probability from low to high.
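A short sketch of how the DS byte breaks down. The helper names are hypothetical; the bit layout (6 bits DSCP, 2 bits ECN) and the standard code points (EF = 46, AF values of the form 8 x class + 2 x drop precedence, Class Selectors with the low three bits zero) are the well-known ones.

```python
def split_ds_field(ds_byte):
    """Return (dscp, ecn) from the 8-bit DS field of an IP header."""
    return ds_byte >> 2, ds_byte & 0x3   # upper 6 bits, lower 2 bits

def describe_dscp(dscp):
    """Name the group a DSCP value belongs to."""
    if dscp == 46:
        return "EF"
    if dscp & 0b111 == 0:
        return f"CS{dscp >> 3}"          # CS0 is the default, best effort
    cls = dscp >> 3                      # AF class 1-4
    drop = (dscp >> 1) & 0b11            # drop precedence 1 (low) to 3 (high)
    if dscp & 1 == 0 and 1 <= cls <= 4 and 1 <= drop <= 3:
        return f"AF{cls}{drop}"
    return "other"

dscp, ecn = split_ds_field(0xB8)
print(dscp, ecn, describe_dscp(dscp))  # 46 0 EF
print(describe_dscp(10))               # AF11
print(describe_dscp(38))               # AF43
```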

Filters

Besides using the values already set inside a packet, one can also use filters to classify specific traffic into specific queues. For example, traffic with a specific source port gets mapped to the queue for telephony traffic, or TCP ACK packets get a higher priority.
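Conceptually, a filter is an ordered list of match conditions where the first hit decides the queue. The sketch below assumes packets are represented as plain dictionaries; the field names, port number and queue numbers are invented for the example.

```python
def classify(packet, rules, default_queue=0):
    """Return the queue of the first rule whose fields all match the packet."""
    for match, queue in rules:
        if all(packet.get(field) == value for field, value in match.items()):
            return queue
    return default_queue

# Hypothetical rules: telephony by source port, pure TCP ACKs slightly raised.
RULES = [
    ({"proto": "udp", "src_port": 5060}, 5),
    ({"proto": "tcp", "ack_only": True}, 4),
]

print(classify({"proto": "udp", "src_port": 5060}, RULES))   # 5
print(classify({"proto": "tcp", "ack_only": True}, RULES))   # 4
print(classify({"proto": "tcp", "ack_only": False}, RULES))  # 0
```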

Congestion management

Now that we know how to classify and queue packets, the question is what to do when a port or queue is congested. This depends on what the hardware supports. Each interface gets N ms of dedicated buffer. When this buffer is full, newly arriving packets are dropped. The question is how we decide which packets get dropped, and when.

Tail Drop Congestion

With tail drop we specify the fill percentage of the queue at which the device starts dropping packets that are marked with a high drop probability. If the queue is full, all arriving packets are dropped.
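The decision for an arriving packet can be sketched in a few lines. The function and parameter names are made up, and the queue length is counted in packets for simplicity.

```python
def tail_drop(queue_len, capacity, high_drop, threshold_pct):
    """Return True if the arriving packet should be dropped.

    queue_len: packets currently in the queue.
    capacity: maximum number of packets the queue can hold.
    high_drop: True if the packet is marked with a high drop probability.
    threshold_pct: fill percentage at which high-drop packets are discarded.
    """
    if queue_len >= capacity:
        return True                      # queue full: everything is dropped
    fill_pct = 100 * queue_len / capacity
    return high_drop and fill_pct >= threshold_pct

print(tail_drop(85, 100, high_drop=True, threshold_pct=80))    # True
print(tail_drop(85, 100, high_drop=False, threshold_pct=80))   # False
print(tail_drop(100, 100, high_drop=False, threshold_pct=80))  # True
```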

Random Early Detection

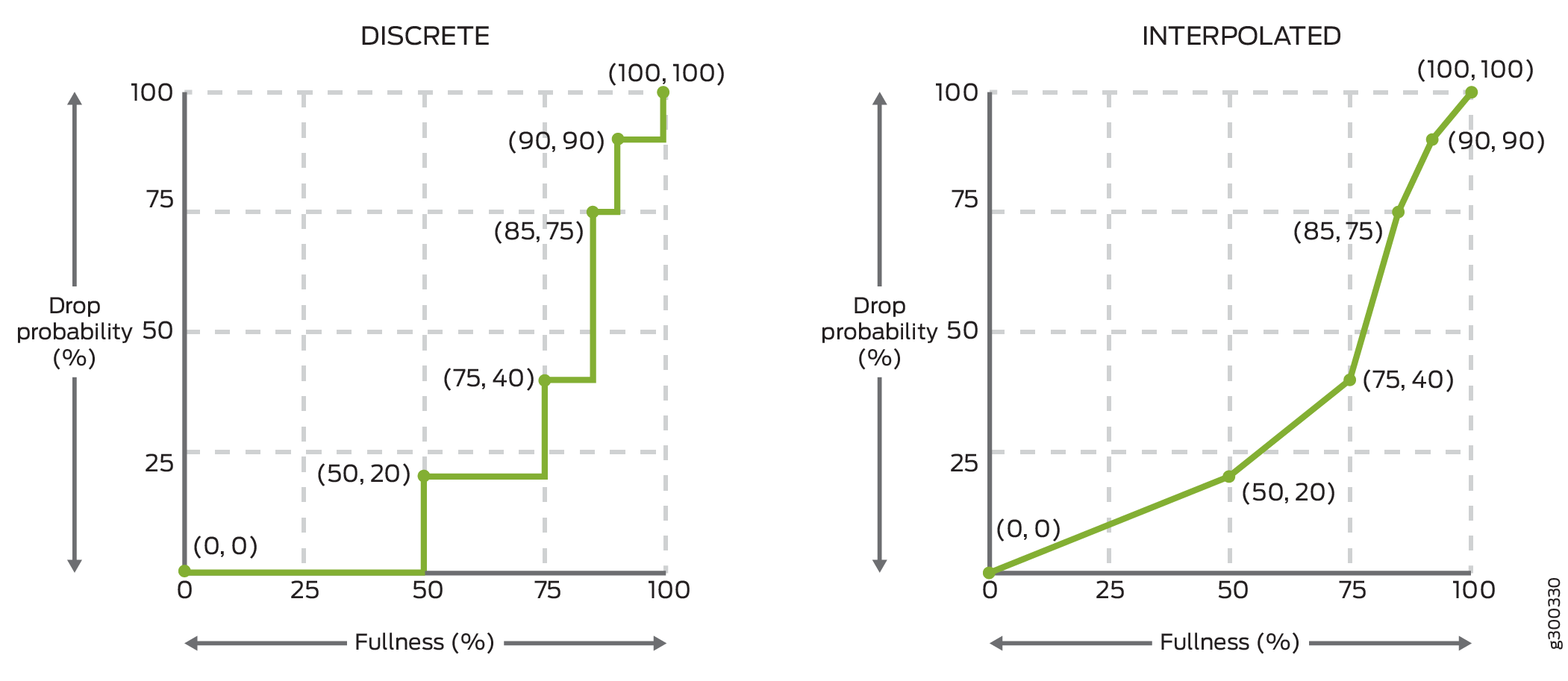

With Random Early Detection (RED) you define a graph with the queue fill level on the X axis and the drop probability on the Y axis. When a packet is transmitted or placed in the queue, it is checked against this graph, and based on the resulting drop probability it is either dropped or transmitted. One can define a graph with hard steps or let the device interpolate between the defined points.
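Here is a minimal sketch of looking up such a graph, both with hard steps and with linear interpolation. The profile points are invented, and the lookup uses the instantaneous fill level as described above; it is not meant to mirror any specific implementation.

```python
import random

def drop_probability(fill_pct, profile, interpolate=True):
    """Look up the drop probability for a queue fill level.

    profile: list of (fill_pct, probability) points sorted by fill_pct.
    """
    if fill_pct < profile[0][0]:
        return 0.0                       # below the first point nothing is dropped
    for (x0, y0), (x1, y1) in zip(profile, profile[1:]):
        if fill_pct < x1:
            if interpolate:
                return y0 + (y1 - y0) * (fill_pct - x0) / (x1 - x0)
            return y0                    # hard step: hold the last defined value
    return profile[-1][1]

def should_drop(fill_pct, profile, rng=random.random):
    """Randomly decide to drop, weighted by the profile."""
    return rng() < drop_probability(fill_pct, profile)

PROFILE = [(50, 0.0), (80, 0.5), (100, 1.0)]  # hypothetical points

print(drop_probability(65, PROFILE))                     # 0.25
print(drop_probability(65, PROFILE, interpolate=False))  # 0.0
```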

Weighted RED

The difference between Weighted RED (WRED) and RED is that one can define one graph per drop probability instead of a single fixed graph for the whole queue, so that packets marked with a higher drop probability get dropped earlier than other packets.
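A small sketch of the per-marking graphs, assuming each one is a simple ramp described by a start level, an end level and a maximum probability. The `PROFILES` values and the function name are hypothetical.

```python
# Hypothetical profiles: (start_pct, end_pct, max_probability) per drop marking.
PROFILES = {
    "low":  (70, 100, 0.2),
    "high": (40, 90, 1.0),
}

def wred_drop_probability(fill_pct, precedence):
    """Drop probability for a packet with the given drop marking."""
    start, end, max_prob = PROFILES[precedence]
    if fill_pct < start:
        return 0.0
    if fill_pct >= end:
        return 1.0                       # past the end of the ramp: always drop
    return max_prob * (fill_pct - start) / (end - start)

# At 60% fill only high-drop packets are at risk.
print(wred_drop_probability(60, "low"))   # 0.0
print(wred_drop_probability(60, "high"))  # 0.4
```

With these numbers, high-drop packets start being discarded at 40% fill, while low-drop packets are untouched until 70%.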

Conclusion

This should give you a basic understanding of the different parts that make up QoS inside a network device. In a future post I will cover H-CoS, where the introduction of a hierarchy makes more advanced kinds of shaping and queuing possible.

-

1. https://archive.nanog.org/sites/default/files/wednesday_tutorial_szarecki_packet-buffering.pdf